My Other Work

Here’s a collection of miscellaneous projects I’ve worked on, along with other areas of development that I have an interest in.

Sound Design

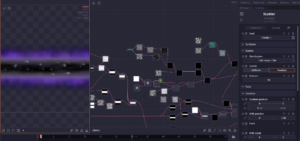

One of my personal favourite parts of development (and one of my hobbies in general) is audio design – I am very well versed in creating sound effects and soundscapes for video game environments, and I take commissions from people needing audio for their video game and modding projects. I also use these same skills to make music in my own time.

To this end, I have collected a range of hardware and software as a part of my studio to help with my sound endeavours, such as:

Various software editors and DAWs as my main platforms to develop audio in, including REAPER, Sound Forge, FL Studio, and Audacity.

Mixers, audio interfaces, studio monitors, headphones, and MIDI keyboards to help with audio production.

Synthesizers, both hardware (physical units that I feed through mixers and interfaces to record from) and software (plugins that run inside DAWs such as REAPER).

Various plugins to provide effects to change audio as needed (reverb, phaser, EQ, etc.).

A well-used and heavily appreciated Zoom H2N, acting as my main microphone for recording my own sounds, such as custom Foley (pages turning, cloth rustling, footsteps, etc.).

A large collection of sound and sample libraries to draw additional audio material from, accumulated over the course of several years.

Want to hear some of the things I’ve made? Check the demos below!

Modelling, Art, and Texturing

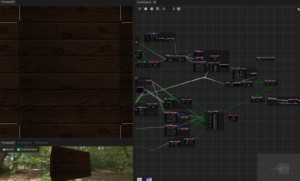

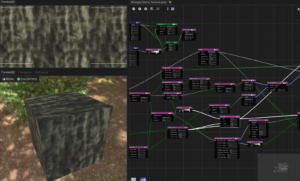

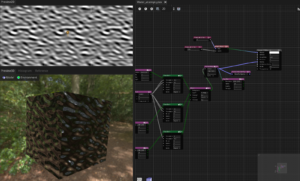

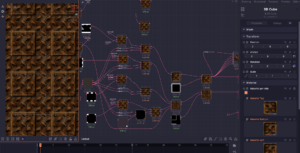

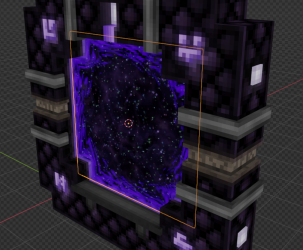

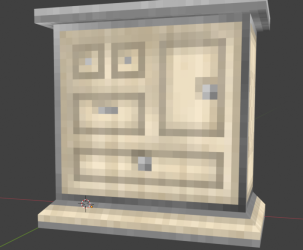

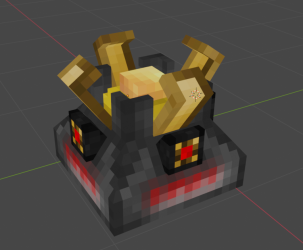

As shown in the VR Whac-a-Mole section, I’m well-versed in using Blender for modelling custom assets for games. In fact, I have used Blender previously for a few different projects, such as for some simple rendering, creating custom shaders and textures, and low-poly modelling for some modding projects. This includes very low-poly work made in Blockbench to create deliberately low-resolution models, which are often used for Minecraft mods!

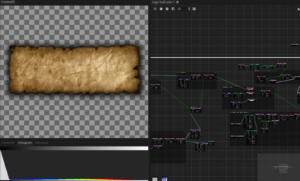

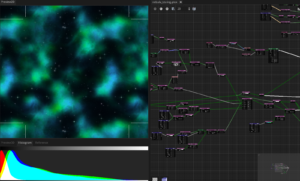

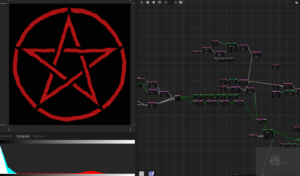

Alongside 3D graphics work, I’m also familiar with 2D work. This includes creating textures for flat surfaces with Material Maker, an open-source alternative to software such as Substance Designer (for texturing 3D objects I mostly use Blender’s shader editor), basic image editing using Photoshop/GIMP/Photopea, and pixel art using Pixel Composer.

I generally prefer using non-destructive and/or node-based workflows, and programs such as Blender, Material Maker or Pixel Composer enable this style of creation very effectively. Elements can be procedurally generated using different types of noise, blended together, colourised freely, warped/manipulated and then output for a final result. Then, if at any point I feel something needs to be tweaked, I can go back along the chain and change any step, and the subsequent steps will still work!
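As a rough illustration of that chain idea, here is a minimal Python sketch. It is not taken from Blender, Material Maker or Pixel Composer, and every function name and parameter in it is made up for the example. Each "node" is a plain function, and the whole chain is rebuilt from a single set of parameters, so tweaking one step simply means changing a value and running the chain again.

```python
import random


def value_noise(width, height, cell, seed):
    """Greyscale grid in [0, 1]: a random lattice, bilinearly interpolated."""
    rng = random.Random(seed)
    cols = width // cell + 2
    rows = height // cell + 2
    lattice = [[rng.random() for _ in range(cols)] for _ in range(rows)]
    grid = []
    for y in range(height):
        gy, fy = divmod(y, cell)
        ty = fy / cell
        row = []
        for x in range(width):
            gx, fx = divmod(x, cell)
            tx = fx / cell
            top = lattice[gy][gx] * (1 - tx) + lattice[gy][gx + 1] * tx
            bottom = lattice[gy + 1][gx] * (1 - tx) + lattice[gy + 1][gx + 1] * tx
            row.append(top * (1 - ty) + bottom * ty)
        grid.append(row)
    return grid


def blend(a, b, amount):
    """Linear mix of two greyscale grids; amount=0 gives a, amount=1 gives b."""
    return [
        [pa * (1 - amount) + pb * amount for pa, pb in zip(row_a, row_b)]
        for row_a, row_b in zip(a, b)
    ]


def colourise(grid, dark, light):
    """Map each greyscale value onto a gradient between two RGB colours."""
    return [
        [tuple(round(d + (l - d) * v) for d, l in zip(dark, light)) for v in row]
        for row in grid
    ]


def build_texture(params):
    """Run the whole chain: two noise layers -> blend -> colourise."""
    size = params["size"]
    coarse = value_noise(size, size, params["coarse_cell"], params["seed"])
    fine = value_noise(size, size, params["fine_cell"], params["seed"] + 1)
    mixed = blend(coarse, fine, params["fine_amount"])
    return colourise(mixed, params["dark"], params["light"])


# Hypothetical settings, chosen only for the example.
PARAMS = {
    "size": 16,
    "coarse_cell": 8,
    "fine_cell": 2,
    "fine_amount": 0.3,
    "seed": 7,
    "dark": (40, 30, 20),
    "light": (200, 180, 150),
}

texture = build_texture(PARAMS)

# Tweak one step in the middle of the chain; everything downstream still works.
tweaked = build_texture(dict(PARAMS, fine_amount=0.9))
```

Because nothing is baked in along the way, the original parameters can always be restored and the first result regenerated exactly.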

Furthermore, because the programs I use are either open-source or easy to customise, I often make custom nodes for them that contain filters or shaders of my own design; these are either built from node setups or programmed in languages such as Lua, HLSL, or GLSL.

See below for examples of things I’ve made!

Material Maker

Pixel Composer

Blender

Motion Capture BEng Project

During my previous studies for a BEng in Electronic Engineering, my third-year final dissertation project was actually very closely related to games development – the creation and evaluation of a motion capture system.

This system, using OptiTrack hardware and software, was designed for use in medical diagnostics – a patient’s gait would be captured and recorded, and this captured motion could then be fed into machine learning systems that would attempt to diagnose medical problems. The initial systems used for this involved Microsoft Kinect cameras, but a few of us students were tasked with creating a more complex system that could provide more accurate motion capture to use as reference data to compare Kinect data against.

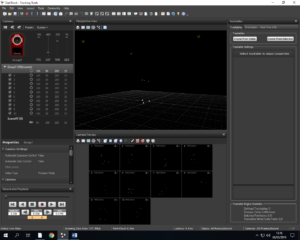

To that end, we were provided with OptiTrack cameras, a room to place them in around a central treadmill, some scaffolding, and a collection of markers and calibration tools – our task was then to figure out how to put all these pieces together into a complete motion capture system.

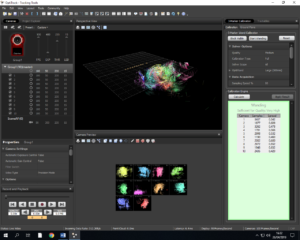

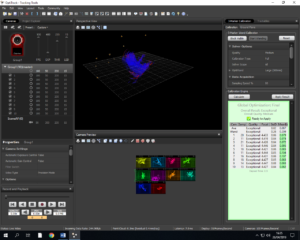

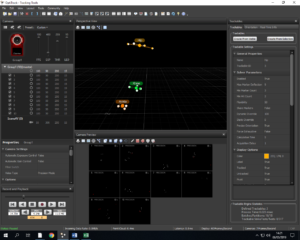

With that in mind, after a lot of research and tinkering, we ended up placing a selection of OptiTrack Flex cameras around the treadmill the tracked subject would walk on (attached to some scaffolding placed above it) and connecting them to a PC. These cameras were then connected to OptiTrack Tracking Tools and calibrated by using a calibration wand (i.e. a stick with reflective markers on it was waved around a lot); with some further tweaking, the software would then know where to place the cameras in a virtual space. From there, a user wearing a motion capture suit (essentially a Lycra jumpsuit with Velcro for attaching infrared markers) would walk on the treadmill to capture their movement – clusters of markers on the suit were placed around joints such as the ankle or knee, and these clusters would be used to capture the position and rotation of that joint.

A Python script was written to capture data recorded by the software, which was plotted on graphs and compared against similar data recorded by the Kinect cameras. The system was indeed far more accurate than the previously used Kinects, and while Kinects were cheaper and easier to set up for use in hospitals, the OptiTrack system was ideal for use in capturing reference data.
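To give a flavour of what such a comparison can look like, here is a minimal Python sketch. It is not the original project script: the function names are hypothetical, the angle values are invented purely for illustration, and it assumes both recordings cover the same stretch of walking but contain different numbers of samples. The shorter trace is linearly resampled to match the reference, then the root-mean-square error (RMSE) between the two is computed.

```python
import math


def resample(samples, count):
    """Linearly interpolate a series to `count` evenly spaced samples."""
    if count < 2 or len(samples) < 2:
        raise ValueError("need at least two samples in and out")
    step = (len(samples) - 1) / (count - 1)
    out = []
    for i in range(count):
        pos = i * step
        lo = min(int(pos), len(samples) - 2)  # clamp so lo + 1 stays in range
        frac = pos - lo
        out.append(samples[lo] * (1 - frac) + samples[lo + 1] * frac)
    return out


def rmse(reference, measured):
    """Root-mean-square error between two equal-length series."""
    if len(reference) != len(measured):
        raise ValueError("series must be the same length")
    total = sum((r - m) ** 2 for r, m in zip(reference, measured))
    return math.sqrt(total / len(reference))


# Hypothetical knee-angle traces in degrees, NOT real measurements.
reference_angles = [5.0, 12.0, 30.0, 55.0, 62.0, 40.0, 15.0, 6.0]  # reference system
kinect_angles = [7.0, 28.0, 58.0, 20.0]                            # fewer samples

aligned = resample(kinect_angles, len(reference_angles))
print(f"RMSE against reference: {rmse(reference_angles, aligned):.2f} degrees")
```

A lower RMSE means the cheaper system tracks the reference more closely; the same two functions could be applied per joint to see where the traces diverge most.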

Here are a few images of the system in use: